The arrival of the year 2000 offered lessons about technology that still apply today. The Y2K episode, in which decades-old date-handling code met complex, interconnected systems, captured the world’s attention. Understanding what happened, and why, helps us build more reliable systems in the future.

The Y2K bug arose from a simple design shortcut: many systems recorded years with only two digits. Although its approach was met with uncertainty and alarm, this seemingly minor flaw left an indelible mark on the history of technology. It serves as a reminder that even small details of our interconnected digital ecosystem can have far-reaching consequences.

Looking beyond the technical details of the Y2K event, society came to a collective realization: the interdependencies among technological systems could inadvertently wreak havoc on a global scale. The aftermath of the Y2K bug underscored the importance of comprehensive risk assessment, proactive measures, and redundancy in technological systems.

- Understanding the Impact of the Y2K Bug

- Uncovering the Vulnerabilities

- The Worldwide Panic and Economic Consequences

- Key Factors in Preventing Technological Disasters

- Effective Risk Assessment and Management

- Investing in Regular System Updates and Maintenance

- Implementing Robust Quality Assurance Processes

- The Role of Government and Industry Regulations

- Enforcing Compliance and Accountability

- Cross-Sector Collaboration for Global Solutions

- Building Resilient Technological Infrastructure

- Investing in Redundancy and Back-up Systems

- Questions and answers

Understanding the Impact of the Y2K Bug

The Y2K bug, also known as the Millennium Bug, was a class of date-handling defects that threatened many computer systems at the turn of the millennium. It stemmed from the way those systems stored and processed dates, particularly the rollover from 1999 to 2000.

During the late 1990s, as the new millennium approached, concerns about the Y2K bug grew. Many computer systems, particularly older ones designed when storage was expensive, recorded years using only two digits (for example, 99 for 1999), so the year 2000 would be stored as 00. Such systems could interpret 00 as 1900 rather than 2000, producing wrong results in date comparisons, sorting, and calculations such as interest or age, and potentially causing widespread errors and malfunctions.
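To make the failure concrete, here is a minimal Python sketch. It assumes a hypothetical legacy record format that stores only the last two digits of the year; the function names are illustrative and not taken from any real system.

```python
def legacy_full_year(yy: int) -> int:
    """Reconstruct a year the way many legacy programs implicitly did: assume 19xx."""
    return 1900 + yy


def years_between(start_yy: int, end_yy: int) -> int:
    """Elapsed years computed directly on two-digit years (the Y2K-vulnerable pattern)."""
    return end_yy - start_yy


# Hypothetical example: an account opened in 1995 ("95") and checked in 2000 ("00").
opened, today = 95, 0
print(legacy_full_year(today))       # 1900, not 2000
print(years_between(opened, today))  # -95, not 5
print(today > opened)                # False: "2000" sorts before "1995"
```

The arithmetic itself is correct; the defect lies in the missing century information, which is why the bug could hide in otherwise working code for decades.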

The potential impact of the Y2K bug was far-reaching, spanning industries such as finance, manufacturing, and transportation, as well as everyday services like utilities and telecommunications. Organizations worldwide recognized the risks and invested significant resources in fixing the bug and mitigating its effects.

- In the financial sector, the Y2K bug had the potential to disrupt stock markets, banking systems, and financial transactions. Extensive efforts were made to ensure that financial systems were Y2K compliant to avoid any catastrophic consequences.

- In the manufacturing industry, the bug posed risks to production lines and supply chains. Companies had to assess and update their systems to prevent production delays and ensure smooth operations during the transition.

- The transportation sector faced challenges in maintaining the functionality of critical infrastructure, such as air traffic control systems and railway networks. Y2K remediation measures were necessary to prevent disruptions in travel and logistics.

- Utilities, including electricity and water supply, also needed to address the Y2K bug to ensure uninterrupted services. Failure to do so could have led to widespread power outages and water shortages.

- Even telecommunications systems were at risk, as outdated software and hardware could have caused disruptions in communication networks. Y2K compliance measures were implemented to minimize the potential impact on global communication.

The Y2K bug serves as a reminder of the importance of thorough system testing and regular updates to prevent technological disasters. The concerted efforts made to address the bug’s potential consequences highlight the need for proactive measures and constant vigilance in the face of evolving technological challenges.

Uncovering the Vulnerabilities

This section examines the inherent weaknesses of technological systems and the factors that contribute to potential disasters. By understanding these vulnerabilities, we can take proactive measures to help prevent future technological catastrophes.

First, it is important to acknowledge the complexity of modern technological infrastructure. Networks composed of many interconnected components create many opportunities for vulnerabilities. Identifying the weak links that could be exploited, or that could simply fail, is vital to preventing system failures and security compromises.

Next, we must consider human fallibility. People design, build, and maintain these systems, and their mistakes can introduce vulnerabilities. Understanding where errors are likely to occur allows us to implement safeguards that reduce the likelihood of disasters.

External influences, such as environmental conditions or malicious attacks, can also expose vulnerabilities and disrupt the functionality and stability of technological systems. By studying these threats, we can design resilient systems that withstand external pressures and keep operating even under challenging circumstances.

Moreover, an analysis of the software and hardware components is necessary to uncover the vulnerabilities that may exist within the intricate layers of technological systems. Flaws in code implementation, outdated software, or inadequate hardware configurations can act as potential entry points for disaster. By addressing these vulnerabilities through diligent software updates and implementing robust hardware standards, we take significant strides towards disaster prevention.

- Understanding the intricacies of complex technological infrastructures

- Exploring the impact of human fallibility on technological systems

- Examining vulnerabilities arising from external influences

- Analyzing software and hardware components for potential weaknesses

By embarking on a comprehensive investigation into these vulnerabilities, we can fortify our technological systems, ensuring that future disasters are minimized, if not altogether prevented. Through proactive measures and a deep understanding of the potential risks, we pave the way for a technologically advanced future that is safeguarded against catastrophic events.

The Worldwide Panic and Economic Consequences

The impact of the Y2K bug went far beyond technical glitches and coding flaws. It sparked a global sense of fear and uncertainty, leading to widespread anxiety and real economic costs. In the eyes of many, the magnitude of the potential disaster was blown out of proportion, and the resulting alarm affected businesses and governments worldwide.

Once news of the Y2K bug spread, individuals, businesses, and government entities around the world found themselves grappling with the uncertain implications of the impending millennium rollover. Many feared that essential systems and infrastructures would fail, causing widespread blackouts, financial collapse, and even compromising public safety.

The resulting sense of urgency led organizations to allocate significant resources to Y2K remediation, diverting money and personnel from other projects. This diversion strained budgets and put some development plans on hold, and uncertainty about the rollover weighed on some investment and purchasing decisions.

- Some investors grew cautious as the rollover approached.

- Some consumers stockpiled cash, food, and supplies, and some postponed major purchases.

- Governments allocated funds for Y2K preparedness and contingency planning.

- Technology-dependent industries diverted resources toward remediation, delaying other work.

Uncertainty about the bug also affected international trade and collaboration. Some companies were hesitant to enter into new contracts or partnerships without assurances of Y2K compliance, which could slow supply chains and delay the production and delivery of goods and services.

While the Y2K bug itself did not cause the catastrophic disasters that were feared, the fear it generated carried real economic costs. The experience is a reminder of the importance of careful risk assessment, effective crisis management, and transparent communication to avoid unnecessary panic and limit the economic fallout from future technological uncertainties.

Key Factors in Preventing Technological Disasters

In the realm of technology, mitigating the risk of catastrophe and ensuring smooth operations are of utmost importance. Achieving this requires considering and implementing several crucial factors.

First, establishing a robust and comprehensive risk assessment framework is vital. This framework should encompass a thorough examination of potential vulnerabilities, weaknesses, and threats within the technological systems. By identifying potential risks, organizations can proactively strategize and implement preventive measures.

Another key factor is the constant upgrading and maintenance of technological infrastructure. Regular audits and evaluations of hardware, software, and networks can help identify and rectify any vulnerabilities or outdated components. Continuous monitoring and timely patching of security loopholes are essential to prevent disasters caused by malicious actors or system failures.

Furthermore, fostering a culture of collaboration and accountability within the organization is crucial. This involves promoting a work environment where employees are encouraged to report any potential issues or vulnerabilities they come across. Conducting regular training sessions on best practices for technology usage and emphasizing the importance of adhering to security protocols can significantly reduce the risk of disasters.

Additionally, integrating redundancy and failsafe mechanisms into technological systems is paramount. This can involve implementing backup servers, data replication, and redundancy measures to ensure that critical systems can seamlessly switch over in the event of a failure. Regular testing and simulation exercises can help identify and fix any gaps in system redundancy and recovery procedures.

Last but not least, establishing a robust crisis management plan is essential. This plan should include clear protocols and communication channels to effectively handle and respond to any technological disasters that may occur. Regular drills and rehearsals of the crisis management plan can help ensure that all stakeholders are prepared to act swiftly and effectively in the face of unforeseen circumstances.

By considering and implementing these key factors in preventing technological disasters, organizations can minimize the risks and impacts of potential catastrophes, ensuring the smooth operation of their technological systems and safeguarding their critical assets.

Effective Risk Assessment and Management

In order to avoid potential future calamities caused by technology failures, it is crucial to implement effective risk assessment and management strategies. By thoroughly evaluating possible risks and employing appropriate measures, organizations can mitigate the chances of technology-related disasters and ensure the smooth operation of their systems.

The process of risk assessment involves identifying and analyzing potential hazards that may adversely impact technological systems. It entails carefully examining various factors, such as vulnerabilities, threats, and weaknesses, to determine the likelihood and potential consequences of a failure. Through this assessment, organizations gain a comprehensive understanding of the risks they face, providing a solid foundation for effective risk management.
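One common way to turn this analysis into a prioritized list is a likelihood × impact score. The sketch below assumes 1 to 5 scales and arbitrary rating thresholds; the class, function, and risk entries are made-up illustrations, not data or a standard framework.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (catastrophic)

    @property
    def score(self) -> int:
        # Simple likelihood x impact product, a common qualitative heuristic.
        return self.likelihood * self.impact


def rating(score: int) -> str:
    # Hypothetical thresholds; real risk frameworks define their own bands.
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


# Illustrative entries only.
risks = [
    Risk("two-digit year fields in billing system", likelihood=5, impact=4),
    Risk("single database server, no failover", likelihood=2, impact=5),
    Risk("outdated printer driver", likelihood=3, impact=1),
]

# Highest-scoring risks first, so remediation effort goes where it matters most.
for r in sorted(risks, key=lambda r: r.score, reverse=True):
    print(f"{r.score:>2}  {rating(r.score):<6}  {r.name}")
```

The scores are only as good as the estimates behind them, which is one reason the reassessment described below matters.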

Effective risk management is essential in shielding organizations from potential disasters. This involves developing strategies and implementing safeguards that minimize the impact of identified risks. By employing a proactive approach, organizations can formulate contingency plans, implement robust security measures, and establish redundancy systems. This way, they can prevent and mitigate the effects of technological failures, ensuring minimal disruption to operations.

Constant monitoring and reassessment are key components of effective risk management. Technological systems evolve rapidly, and with them, new risks may emerge. Regularly reviewing risk assessments and adapting strategies accordingly ensures that organizations stay ahead of potential threats. This proactive approach enables them to identify and address vulnerabilities in a timely manner, enhancing the overall resilience of their technological infrastructure.

In conclusion, effective risk assessment and management play a vital role in preventing future technological disasters. By thoroughly evaluating risks, developing robust strategies, and constantly reassessing vulnerabilities, organizations can ensure the reliability and security of their technology systems. Implementing these practices safeguards against potential failures and promotes seamless operations, bolstering overall efficiency and productivity.

Investing in Regular System Updates and Maintenance

One crucial aspect of maintaining a technologically advanced system is investing in regular updates and maintenance. By regularly updating and maintaining our systems, we can ensure their efficient and secure operation, reducing the risk of future technological disasters.

Regular updates involve installing the latest software patches, security updates, and bug fixes provided by the system developers. These updates address vulnerabilities and enhance the system’s performance, stability, and security. Neglecting to install updates could leave the system exposed to potential threats, as hackers and malicious individuals continuously look for vulnerabilities to exploit.

Maintenance, on the other hand, involves routine checks, repairs, and optimization of the system’s hardware components and software configuration. It includes tasks such as cleaning hardware components, optimizing storage space, conducting diagnostic tests, and ensuring all dependencies and drivers are up to date. Through regular maintenance, potential issues can be identified and resolved before they escalate into larger problems that could disrupt operations.
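Checking that dependencies and drivers are up to date can be partly automated. This minimal sketch assumes a hypothetical inventory of installed versions and a hypothetical policy of minimum versions, both using plain dotted numeric versions. Versions are compared as integer tuples, so 3.10 correctly ranks above 3.2, which a plain string comparison would get wrong.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Parse a simple dotted numeric version like '2.10.1' (no pre-release tags)."""
    return tuple(int(part) for part in text.split("."))


def outdated(installed: dict[str, str], minimum: dict[str, str]) -> list[str]:
    """Return components that are missing or below their required minimum version."""
    stale = []
    for name, required in minimum.items():
        current = installed.get(name)
        if current is None or parse_version(current) < parse_version(required):
            stale.append(name)
    return stale


# Hypothetical inventory and policy, for illustration only.
installed = {"openssl": "1.1.1", "kernel": "5.15.0", "nic-driver": "3.2"}
minimum = {"openssl": "3.0.0", "kernel": "5.15.0", "nic-driver": "3.10", "backup-agent": "2.0"}
print(outdated(installed, minimum))  # ['openssl', 'nic-driver', 'backup-agent']
```

Real version strings often include pre-release or build tags that this parser rejects; a dedicated library such as the third-party `packaging` project handles those cases.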

Investing in regular updates and maintenance not only helps prevent future technological disasters but also enhances productivity and efficiency. By keeping our systems up to date and well-maintained, we can ensure uninterrupted workflow, minimize downtime, and avoid costly repairs. Moreover, it allows us to adapt to the ever-evolving technological landscape, incorporating new features and functionalities to stay ahead of the competition.

Benefits of investing in regular system updates and maintenance:

| Benefit | Description |
|---|---|
| Enhanced security | Regular updates patch security vulnerabilities, protecting the system from potential threats. |
| Improved performance | Updates and maintenance optimize system resources, leading to faster and more efficient operation. |
| Reduced downtime | By addressing potential issues proactively, regular maintenance helps avoid unexpected system failures and downtime. |
| Increased longevity | Well-maintained systems are likely to have a longer lifespan, reducing the need for frequent replacements. |
| Improved competitiveness | Staying current through updates and maintenance lets businesses remain competitive by leveraging new features and functionalities. |

In conclusion, investing in regular system updates and maintenance is a critical step in preventing future technological disasters. By staying proactive in keeping our systems updated and well-maintained, we can ensure their security, performance, and longevity, while also gaining a competitive edge in the ever-changing technological landscape.

Implementing Robust Quality Assurance Processes

Creating and maintaining a strong quality assurance (QA) system is essential for avoiding potential technological disasters and ensuring the smooth functioning of systems and applications. Effective QA processes involve rigorous testing, continuous monitoring, and thorough evaluation of software and hardware components.

One crucial aspect of implementing robust QA processes is conducting comprehensive testing at every stage of development. This includes unit testing, integration testing, and system testing, among others. By conducting thorough testing, software engineers can identify and rectify potential flaws and vulnerabilities before they become major issues.
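Boundary dates are exactly where date-handling code tends to break. As an illustration, this sketch uses Python's built-in `unittest` module to check the 1999 to 2000 rollover and the year-2000 leap day (2000 is divisible by 400, so unlike 1900 it is a leap year). The two helper functions are hypothetical examples of code under test.

```python
import unittest
from datetime import date


def is_leap_year(year: int) -> bool:
    """Gregorian rule: every 4th year, except centuries not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def days_between(start: date, end: date) -> int:
    """Days elapsed between two full (four-digit-year) dates."""
    return (end - start).days


class DateBoundaryTests(unittest.TestCase):
    def test_century_rollover(self):
        self.assertEqual(days_between(date(1999, 12, 31), date(2000, 1, 1)), 1)

    def test_year_2000_is_leap(self):
        # 2000 is divisible by 400, so it IS a leap year; 1900 is not.
        self.assertTrue(is_leap_year(2000))
        self.assertFalse(is_leap_year(1900))

    def test_feb_29_2000_is_counted(self):
        # Feb 28 -> Mar 1 spans two days only because Feb 29, 2000 exists.
        self.assertEqual(days_between(date(2000, 2, 28), date(2000, 3, 1)), 2)


if __name__ == "__main__":
    # exit=False lets the script continue after the test run finishes.
    unittest.main(argv=["date-boundary-tests"], exit=False)
```

Tests like these are cheap to write and document the edge cases explicitly, so a later change that breaks them fails loudly instead of silently.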

Additionally, it is vital to establish clear quality standards and guidelines that all team members should adhere to. This ensures consistency in the development process and helps prevent common mistakes and errors. QA teams should also document the best practices and lessons learned from previous experiences to serve as a reference for future projects.

A proactive approach to QA involves continuous monitoring and analysis of system performance. Through the use of automated monitoring tools and regular inspections, potential problems can be identified and addressed promptly. This allows for timely remediation and minimizes the risk of failures or disruptions.

Regular audits and reviews of the QA processes are also crucial to ensure their effectiveness and identify areas for improvement. Evaluating the performance of the QA team, analyzing the efficiency of testing methodologies, and addressing any bottlenecks or inefficiencies are essential for maintaining a robust QA system.

Lastly, effective communication and collaboration among team members are vital for successful QA implementation. Having a dedicated QA team that works closely with developers, project managers, and stakeholders can help facilitate efficient bug tracking, issue resolution, and overall improvement of the development process.

In conclusion, implementing robust QA processes is indispensable for preventing technological disasters and enhancing overall system reliability. By conducting thorough testing, setting clear quality standards, monitoring system performance, conducting regular audits, and fostering effective communication, organizations can significantly reduce the likelihood of future technological failures.

The Role of Government and Industry Regulations

Regulation and oversight play a crucial role in ensuring the safety and reliability of technological systems. In order to prevent potential disasters, both governments and industry organizations must establish comprehensive guidelines and enforce appropriate regulations. These regulations serve as a safeguard against potential failures, ensuring the continued functionality and security of technological infrastructure.

Government intervention

The government plays a vital role in setting standards and guidelines that help mitigate the risks associated with technological advancements. From drafting regulations to monitoring compliance, government agencies work to ensure that industries adhere to established safety protocols. By establishing laws and regulations, governments can enforce accountability and promote responsible behavior among companies operating within the technological landscape.

The government’s role also extends to creating a framework for collaboration between industries. Through initiatives such as industry task forces and regulatory bodies, governments facilitate information sharing and promote best practices. This collaboration not only enhances the overall safety of technological systems but also fosters innovation and continuous improvement within industries.

Importance of industry regulations

Industry regulations complement government oversight by providing an additional layer of accountability specific to each sector. These regulations, often developed by industry associations and organizations, are tailored to address the unique challenges and risks associated with specific technologies. They help establish industry-wide standards, guidelines, and best practices that ensure consistency and reliability across various technological systems.

By participating in the development and implementation of these regulations, companies demonstrate their commitment to ensuring the safety and reliability of their products and services. Industry regulations also offer a level of reassurance to consumers and stakeholders, building trust in the integrity of the technologies being utilized.

In conclusion, both government regulations and industry standards are essential in preventing future technological disasters. Governments provide oversight and establish general guidelines, while industry organizations focus on developing tailored regulations specific to their respective fields. By working together and adhering to these regulations, governments and industries can create a safer and more resilient technological landscape for future generations.

Enforcing Compliance and Accountability

In this section, we will explore the importance of enforcing compliance and accountability in order to prevent future technological disasters. By implementing strict regulations and protocols, organizations can ensure that their systems and technologies meet the necessary standards for reliability and security.

- Establishing Clear Guidelines: Organizations must develop clear guidelines and policies regarding technological practices and standards. These guidelines should outline the necessary steps and measures to be taken in order to ensure compliance with industry regulations and best practices.

- Regular Audits and Assessments: Routine audits and assessments should be conducted to evaluate and monitor compliance with established guidelines. These audits can identify potential vulnerabilities or weaknesses in systems and technologies, allowing organizations to take proactive measures to mitigate risks.

- Implementing Robust Security Measures: Enforcing compliance requires the implementation of robust security measures, including firewalls, encryption protocols, and access control mechanisms. By ensuring that these measures are in place and regularly updated, organizations can minimize the risk of security breaches and unauthorized access.

- Training and Education: Organizations should invest in training and educating their employees about compliance requirements and the potential consequences of non-compliance. By increasing awareness and knowledge, employees can better understand their roles and responsibilities in maintaining compliance.

- Enforcing Consequences: To ensure accountability, organizations must establish consequences for non-compliance. This can include disciplinary actions, fines, or termination in severe cases. By enforcing consequences, organizations create a culture of accountability and encourage individuals to take compliance seriously.

- Collaboration with Regulatory Bodies: Collaborating with regulatory bodies can provide organizations with valuable insights and guidance on compliance requirements. By establishing strong partnerships and open lines of communication, organizations can stay updated on evolving regulations and take proactive steps to meet compliance standards.

Enforcing compliance and accountability is crucial in preventing future technological disasters. By adhering to regulatory standards, organizations can build a secure and reliable technological infrastructure, protecting both their own interests and the wider society from potential risks and disruptions.

Cross-Sector Collaboration for Global Solutions

In an ever-connected world, where technology permeates every aspect of our lives, it is crucial to adopt a collaborative approach in addressing global challenges. This cross-sector collaboration brings together diverse expertise, knowledge, and resources from different industries, resulting in innovative solutions that can prevent and mitigate potential technological disasters.

By fostering collaboration between sectors such as government, academia, and the private sector, we can harness the collective power of various stakeholders to address complex issues at a global scale. Through knowledge sharing, research collaboration, and joint initiatives, we can stay ahead of emerging threats and ensure the resilience of our technological infrastructure.

Collaboration across sectors also enables the pooling of resources and expertise, allowing for more comprehensive risk assessment and response strategies. When multiple perspectives are taken into consideration, it becomes easier to identify potential weaknesses and develop robust preventive measures. Moreover, collaboration promotes transparency and accountability, ensuring that all stakeholders are actively involved in the decision-making process.

Furthermore, cross-sector collaboration fosters innovation by promoting the exchange of ideas and best practices. The diverse backgrounds and experiences of individuals from different sectors can lead to outside-the-box thinking and creative problem-solving. By encouraging interdisciplinary approaches, we can develop cutting-edge technologies and systems that are resilient and adaptable to changing circumstances.

Ultimately, cross-sector collaboration is not only necessary to prevent future technological disasters but also to create a safer, more sustainable world. By working together, we can build a global network of expertise and resources that can effectively address the challenges of the modern world and ensure the continued advancement of technology without compromising the safety and well-being of society.

Building Resilient Technological Infrastructure

In the aftermath of the Y2K bug, it became evident that a resilient technological infrastructure is essential to preventing future technological disasters. As technology continues to advance at a rapid pace, it is crucial to build systems and networks that can withstand potential challenges and disruptions.

One key aspect of building resilient technological infrastructure is the implementation of redundancy. Redundancy involves having backup systems and components in place to minimize downtime and mitigate the impact of failures. By having redundant servers, power supplies, and network connections, organizations can ensure that even if one component fails, the system can continue to operate smoothly.
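At the application level, redundancy often shows up as failover: if the primary component does not respond, a standby takes over. The sketch below is a minimal illustration that assumes each replica is a callable returning a result or raising an exception; the `primary` and `standby` functions are hypothetical stand-ins for real servers.

```python
from typing import Callable, Sequence


def call_with_failover(replicas: Sequence[Callable[[], str]]) -> str:
    """Try each redundant replica in order and return the first successful result."""
    errors = []
    for replica in replicas:
        try:
            return replica()
        except Exception as exc:  # in practice, catch narrower, expected errors
            errors.append(exc)
    raise RuntimeError(f"all {len(replicas)} replicas failed: {errors}")


# Hypothetical replicas: the primary is down, the standby answers.
def primary() -> str:
    raise ConnectionError("primary server unreachable")


def standby() -> str:
    return "served by standby"


print(call_with_failover([primary, standby]))  # served by standby
```

Redundancy only helps if the failover path is exercised; a standby that has never been tested may fail at the moment it is needed.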

Furthermore, disaster recovery planning plays a vital role in building resilience. Organizations must develop comprehensive strategies and protocols to respond effectively to various scenarios, including natural disasters, cyber-attacks, and technical failures. This includes regular backups, off-site storage of critical data, and well-documented procedures for system restoration.

Another crucial aspect to consider is the importance of regular system maintenance and updates. Technological infrastructure is constantly evolving, and outdated systems are more prone to vulnerabilities and exploits. Implementing regular system patches, security updates, and software upgrades can significantly reduce the risk of system failures and security breaches.

Additionally, organizations should invest in robust monitoring and early warning systems that can detect and address potential issues before they escalate into full-blown disasters. Proactive monitoring of network traffic, system performance, and security threats can help identify vulnerabilities and take corrective measures promptly.
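Early warning often starts with simple threshold rules. This sketch assumes a single numeric metric (here, made-up CPU utilization readings) and raises an alert when its moving average crosses a threshold, so one brief spike does not trigger an alarm. The class name, window size, and threshold are illustrative choices, not recommendations.

```python
from collections import deque
from statistics import mean


class ThresholdMonitor:
    """Alert when the moving average of a metric exceeds a threshold."""

    def __init__(self, threshold: float, window: int = 5):
        self.threshold = threshold
        self.samples = deque(maxlen=window)  # keeps only the last `window` readings

    def observe(self, value: float) -> bool:
        """Record a reading; return True if the moving average is above the threshold."""
        self.samples.append(value)
        return mean(self.samples) > self.threshold


# Hypothetical CPU utilization readings (percent), alert threshold 85%.
cpu = ThresholdMonitor(threshold=85.0, window=3)
for reading in [70, 90, 72, 95, 96, 97]:
    if cpu.observe(reading):
        print(f"ALERT: CPU moving average above 85% (latest reading {reading}%)")
```

With a window of 3, the isolated 90% reading does not alert, while the sustained run of 95 to 97% does; a shorter window reacts faster but produces more false alarms.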

Lastly, fostering a culture of continuous improvement and learning is essential for building resilient technological infrastructure. Learning from past incidents and sharing knowledge within the organization can enable teams to identify and address potential weaknesses in the system. Encouraging collaboration, regular training sessions, and incorporating technological advancements can enhance the overall resilience of the infrastructure.

| Key Takeaways |
|---|
| Redundancy is crucial to minimize downtime and mitigate failures. |
| Disaster recovery planning ensures effective response to various scenarios. |
| Regular system maintenance and updates reduce the risk of failures and breaches. |
| Monitoring and early warning systems help detect and address potential issues. |
| Fostering a culture of continuous improvement enhances overall resilience. |

Investing in Redundancy and Back-up Systems

Ensuring the stability and reliability of technological systems is crucial in preventing potential disasters. One way to achieve this is by investing in redundancy and back-up systems. These additional systems act as a safety net, providing alternative pathways and resources in the event of a failure or disruption.

Redundancy refers to the duplication or replication of critical components or entire systems. By having redundant systems in place, organizations can mitigate the impact of failures. This can involve duplicating hardware, software, or even entire networks. Redundancy ensures that if one component or system fails, there are others readily available to seamlessly take over operations.

Back-up systems, on the other hand, involve creating copies or backups of important data and files. This allows organizations to quickly recover and restore any lost or corrupted data. Back-up systems can be implemented on-site or off-site, with off-site solutions being particularly effective in the face of physical disasters that may impact an organization’s primary location.

Investing in redundancy and back-up systems requires careful planning and allocation of resources. It necessitates identifying critical components, assessing potential failure points, and implementing redundant alternatives. Additionally, organizations must establish effective backup strategies, including regular backups, data encryption, and off-site storage.
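A backup is only useful if it can be restored, so verifying each copy is a sensible habit. This minimal sketch copies a file into a backup directory and compares SHA-256 checksums of the original and the copy. The temporary `offsite` directory merely stands in for real off-site storage, and encryption is not shown; both are simplifying assumptions.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(source: Path, backup_dir: Path) -> Path:
    """Copy `source` into `backup_dir` and confirm the copy is byte-identical."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)  # copy2 also tries to preserve metadata such as modification time
    if sha256_of(source) != sha256_of(target):
        raise OSError(f"backup of {source} failed verification")
    return target


if __name__ == "__main__":
    # Demo in a throwaway directory; "offsite" is a local stand-in for remote storage.
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "ledger.csv"
        src.write_text("account,balance\n1001,250.00\n")
        copy = backup_and_verify(src, Path(tmp) / "offsite")
        print(f"verified backup at {copy}")
```

Storing the checksum alongside the backup also makes it possible to re-verify the copy later, for example during scheduled restore tests.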

While investing in redundancy and back-up systems incurs initial costs, the long-term benefits often outweigh the expenses. These systems provide a safety net, ensuring the continuity of operations and minimizing downtime. They safeguard against the potential loss of crucial data and mitigate the impact of unforeseen events or technological failures.

In conclusion, investing in redundancy and back-up systems is a proactive measure to prevent future technological disasters. By duplicating critical components and creating backup copies of important data, organizations can mitigate the impact of failures and disruptions. This investment ensures the stability, reliability, and resilience of technological systems, ultimately safeguarding against potential disasters.

Questions and answers

What was the Y2K bug?

The Y2K bug was a programming problem in computer systems that stored years as two digits, for example "99" for 1999. A stored "00" could be read as 1900 rather than 2000. In the years leading up to the new millennium, this raised the fear that systems would miscalculate dates, ages, and intervals once the calendar rolled over.
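The failure mode can be shown in a few lines of Python. This minimal sketch assumes a legacy system that stores years as two-digit integers and implicitly treats the century as 19xx. The function names are hypothetical. With two-digit years, an interval computed from `65` to `00` comes out negative. The same calculation with four-digit years is correct.

```python
def expand_naively(yy: int) -> int:
    """Legacy assumption (hypothetical helper): every two-digit year is 19xx."""
    return 1900 + yy


def years_between_two_digit(start_yy: int, end_yy: int) -> int:
    """Interval computed directly from two-digit years."""
    return end_yy - start_yy


def years_between_four_digit(start_yyyy: int, end_yyyy: int) -> int:
    """Same calculation with full four-digit years."""
    return end_yyyy - start_yyyy


# An account opened in 1965 ("65"), checked in 2000 ("00").
print(expand_naively(0))                     # prints 1900
print(years_between_two_digit(65, 0))        # prints -65
print(years_between_four_digit(1965, 2000))  # prints 35
```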

What were the consequences of the Y2K bug?

Most of the Y2K bug's potential consequences were anticipated and addressed before they could cause significant damage. Some minor problems did occur, such as incorrectly displayed dates and isolated software glitches. These were small compared with the catastrophic outcomes that had initially been feared.

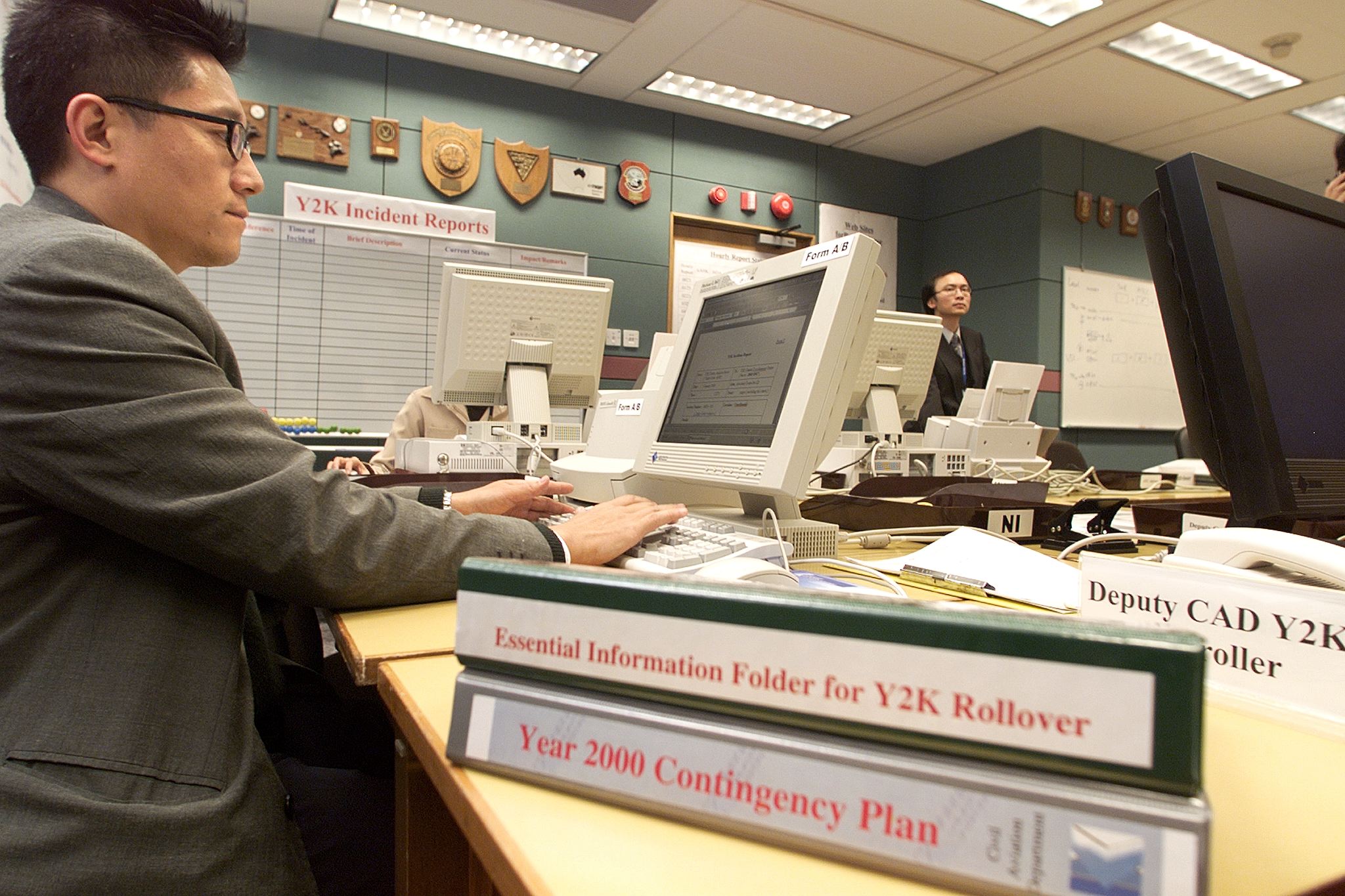

How was the Y2K bug prevented?

The Y2K bug's effects were largely prevented through a global effort to identify and fix affected computer systems. IT professionals and software developers updated software so that systems would handle the year 2000 correctly. This work involved extensive testing, debugging, and system upgrades.
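Fixes generally took one of two forms. The first was expansion, which stores every year with four digits. The second was windowing, which keeps two-digit storage but interprets each value relative to a pivot year. The minimal Python sketch below illustrates windowing. The pivot of 50 is an assumption chosen for illustration and is not a standard value. `window_year` is a hypothetical name.

```python
PIVOT = 50  # hypothetical pivot: 00-49 -> 20xx, 50-99 -> 19xx


def window_year(yy: int, pivot: int = PIVOT) -> int:
    """Fixed-window interpretation of a two-digit year."""
    if not 0 <= yy <= 99:
        raise ValueError(f"not a two-digit year: {yy}")
    return 2000 + yy if yy < pivot else 1900 + yy


print(window_year(0))   # prints 2000
print(window_year(49))  # prints 2049
print(window_year(65))  # prints 1965
```

Windowing was cheaper than expanding every stored date, but it only postpones the ambiguity. With this pivot, a year of 2050 stored as `50` would be read as 1950.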

What are the lessons learned from the Y2K bug?

There are several lessons learned from the Y2K bug. Firstly, it highlighted the importance of proper software development practices, such as using four-digit year values and thorough testing. Secondly, it emphasized the significance of proactive problem-solving and collaboration among professionals across various sectors. Lastly, it demonstrated the need for continuous monitoring and updates to ensure the long-term stability of computer systems.
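Thorough testing meant deliberately exercising boundary dates. A related boundary to the rollover was the Gregorian leap-year rule. 2000 was a leap year because it is divisible by 400, while 1900 was not. The minimal Python sketch below tests a hypothetical helper, `days_in_february`, at these boundaries. It then cross-checks the helper against the standard `calendar` module as an independent reference. An implementation with an incomplete leap-year rule would fail these tests.

```python
import calendar


def is_leap(year: int) -> bool:
    """Gregorian rule: every 4th year, except centuries not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def days_in_february(year: int) -> int:
    """Hypothetical helper under test: length of February in a four-digit year."""
    return 29 if is_leap(year) else 28


def test_century_boundaries() -> None:
    # Boundary values around the rollover and the century rule.
    assert days_in_february(1999) == 28
    assert days_in_february(1900) == 28  # century not divisible by 400
    assert days_in_february(2000) == 29  # century divisible by 400
    assert days_in_february(2004) == 29
    # Cross-check against the standard library over a wide range of years.
    for year in range(1800, 2401):
        assert days_in_february(year) == calendar.monthrange(year, 2)[1]


test_century_boundaries()
print("boundary checks passed")
```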

Are there any similarities between the Y2K bug and potential future technological disasters?

While each technological disaster has its own characteristics, the Y2K bug shares some features with potential future technological disasters. Both involve the possible malfunction or failure of computer systems on a large scale. Both also underscore the importance of preemptive action, thorough planning, and cooperation among stakeholders to mitigate risks and prevent severe consequences.

What is the Y2K bug?

The Y2K bug, also known as the Year 2000 problem, was a computer bug that arose due to the two-digit year representation in computer systems. It was feared that when the calendar rolled over from December 31, 1999, to January 1, 2000, computer systems would interpret the year as 1900 instead of 2000, leading to potential errors and system failures.

What were the consequences of the Y2K bug?

The potential consequences of the Y2K bug were widespread and varied. It was feared that critical infrastructure systems such as power grids, telecommunications, financial institutions, and transportation systems would experience failures, leading to disruptions in everyday life. However, due to extensive preparations and fixes, the actual impact was significantly minimized.

How did the Y2K bug affect businesses?

The Y2K bug had a significant impact on businesses. Many organizations had to invest substantial time, resources, and money to ensure their computer systems were Y2K compliant. This involved updating software, replacing hardware, and conducting extensive testing to detect and fix any potential issues. The Y2K bug also raised awareness about the importance of robust technical systems and the need for proper contingency planning.

What lessons can be learned from the Y2K bug?

The Y2K bug taught several important lessons. Firstly, it highlighted the necessity of proper coding practices and the use of standardized date formats in computer systems. It also emphasized the importance of early detection and prevention of potential technological disasters. Additionally, the Y2K bug demonstrated the significance of collaboration and coordination between different sectors in tackling a large-scale technological issue.
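Standardized date formats help by removing ambiguity. The minimal Python sketch below uses ISO 8601 calendar dates (YYYY-MM-DD), one widely used standard that Python's `date.isoformat()` produces. It compares them with a hypothetical legacy YYMMDD format. Sorted as plain text, the four-digit form comes out in chronological order. The two-digit form puts 2000 before 1999.

```python
from datetime import date


def to_iso(d: date) -> str:
    """ISO 8601 calendar date: four-digit year first, largest unit to smallest."""
    return d.isoformat()


def to_legacy_yymmdd(d: date) -> str:
    """Hypothetical legacy format with a two-digit year."""
    return d.strftime("%y%m%d")


days = [date(1999, 12, 31), date(2000, 1, 1)]
print(sorted(to_iso(d) for d in days))
# prints ['1999-12-31', '2000-01-01'] (chronological)
print(sorted(to_legacy_yymmdd(d) for d in days))
# prints ['000101', '991231'] (2000 sorts before 1999)
```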

Are there any similarities between the Y2K bug and potential future technological disasters?

While it is difficult to predict specific future technological disasters, similarities can be drawn between the Y2K bug and potential future issues. Both highlight the risks associated with outdated technology and the importance of proactively addressing them. They also underscore the need for continuous monitoring of systems, advanced planning, and effective communication to mitigate potential disasters.